… properly, or at least ‘better’.

In the past I have provided ranked lists of journals in conservation ecology according to their ISI® Impact Factor (see lists for 2008, 2009, 2010, 2011, 2012 & 2013). These lists have proven to be exceedingly popular.

Why are journal metrics and the rankings they imply so in demand? Despite many people loathing the entire concept of citation-based journal metrics, we scientists, our administrators, granting agencies, award committees and promotion panellists use them with such merciless frequency that our academic fates are intimately bound to the ‘quality’ of the journals in which we publish.

Human beings love to rank themselves and others, the things they make, and the institutions to which they belong, so it’s a natural expectation that scientific journals are ranked as well.

I’m certainly not the first to suggest that journal quality cannot be fully captured by some formulation of the number of citations its papers receive; ‘quality’ is an elusive characteristic that includes inter alia things like speed of publication, fairness of the review process, prevalence of gate-keeping, reputation of the editors, writing style, within-discipline reputation, longevity, cost, specialisation, open-access options and even its ‘look’.

It would be impossible to include all of these aspects into a single ‘quality’ metric, although one could conceivably rank journals according to one or several of those features. ‘Reputation’ is perhaps the most quantitative characteristic when measured as citations, so we academics have chosen the lowest-hanging fruit and built our quality-ranking universe around them, for better or worse.

I was never really satisfied with metrics like black-box Impact Factors, so when I started discovering other ways to express the citation performance of the journals to which I regularly submit papers, I became a little more interested in the field of bibliometrics.

In 2014 I wrote a post about what I thought was a fairer way to judge peer-reviewed journal ‘quality’ than the default option of relying solely on ISI® Impact Factors. I was particularly interested in why the new kid on the block — Google Scholar Metrics — gave at times rather wildly different ranks of the journals in which I was interested.

So I came up with a simple mean ranking method to get some idea of the relative citation-based ‘quality’ of these journals.

It was a bit of a laugh, really, but my long-time collaborator, Barry Brook, suggested that I formalise the approach and include a wider array of citation-based metrics in the mean ranks.

Because Barry’s ideas are usually rather good, I followed his advice and together we constructed a more comprehensive, although still decidedly simple, approach to estimate the relative ranks of journals from any selection one would care to cobble together. In this case, however, we also included a rank-placement resampler to estimate the uncertainty associated with each rank.

I’m pleased to announce that the final version¹ is now published in PLoS One².

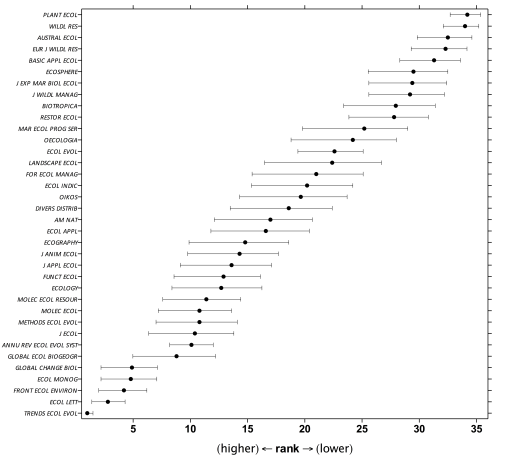

Without going into too much detail (you can read all about it in our paper), our approach took five of the most well-known, least inter-correlated and easily accessible citation-based metrics (Impact Factor, Immediacy Index, Google Scholar 5-year h-index, Source-Normalised Impact per Paper & SCImago Journal Rank), ranked them, then calculated the mean rank per journal.
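To make the mean-rank step concrete, here is a minimal Python sketch. The paper supplies its own R script, which this does not reproduce. The journals J_A to J_D and all their metric values are invented for illustration, and the short labels IF, IM, h5, SNIP and SJR simply stand for the five metrics named above. Each metric is ranked separately, with 1 going to the highest value and tied values sharing their average rank. The per-metric ranks are then averaged for each journal.

```python
from statistics import mean

# Invented metric values for four hypothetical journals (not real data).
# For every metric here, a higher value means better citation performance.
metrics = {
    "IF":   {"J_A": 5.1, "J_B": 3.2, "J_C": 8.0, "J_D": 2.4},
    "IM":   {"J_A": 1.0, "J_B": 0.6, "J_C": 1.9, "J_D": 0.7},
    "h5":   {"J_A": 60,  "J_B": 75,  "J_C": 90,  "J_D": 30},
    "SNIP": {"J_A": 1.8, "J_B": 1.2, "J_C": 2.5, "J_D": 0.9},
    "SJR":  {"J_A": 2.9, "J_B": 1.5, "J_C": 4.1, "J_D": 1.1},
}

def rank_descending(values):
    """Rank journals so the highest value gets rank 1; ties share their average rank."""
    ordered = sorted(values, key=values.get, reverse=True)
    ranks = {}
    i = 0
    while i < len(ordered):
        j = i
        # Extend j over any run of values tied with ordered[i]
        while j + 1 < len(ordered) and values[ordered[j + 1]] == values[ordered[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[ordered[k]] = avg
        i = j + 1
    return ranks

def mean_ranks(metrics):
    """Rank each metric separately, then average the ranks per journal."""
    per_metric = [rank_descending(v) for v in metrics.values()]
    journals = per_metric[0].keys()  # assumes every metric covers the same journals
    return {j: mean(r[j] for r in per_metric) for j in journals}

for journal, r in sorted(mean_ranks(metrics).items(), key=lambda kv: kv[1]):
    print(f"{journal}: {r:.1f}")
# J_C: 1.0
# J_A: 2.2
# J_B: 3.0
# J_D: 3.8
```

Every metric is reduced to a rank before averaging, so no single metric's scale (an Impact Factor of 8 versus an h-index of 90) can dominate the composite.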

We also implemented a resampling approach that provides the uncertainty of each journal’s rank within the sample.
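The paper describes its own rank-placement resampler. The sketch below is not that procedure. It is one simple way to attach uncertainty to a mean rank, under an assumption chosen here for illustration: resample the five metric columns with replacement many times (a bootstrap over metrics), recompute each journal's mean rank every time, and report the central 95% of the resampled values. The rank table is hypothetical, and `bootstrap_rank_intervals` and `percentile` are illustrative helpers, not part of any library.

```python
import random

# Hypothetical per-metric ranks (1 = best) for four invented journals,
# one column per metric: IF, IM, h5, SNIP, SJR.
ranks = {
    "J_A": [2, 2, 3, 2, 2],
    "J_B": [3, 4, 2, 3, 3],
    "J_C": [1, 1, 1, 1, 1],
    "J_D": [4, 3, 4, 4, 4],
}

def percentile(sorted_vals, q):
    """Nearest-rank percentile of an already-sorted list, with q in [0, 1]."""
    idx = round(q * (len(sorted_vals) - 1))
    return sorted_vals[idx]

def bootstrap_rank_intervals(ranks, n_iter=10_000, seed=1):
    """Approximate 95% intervals for each journal's mean rank by resampling metrics."""
    rng = random.Random(seed)
    n_metrics = len(next(iter(ranks.values())))
    draws = {j: [] for j in ranks}
    for _ in range(n_iter):
        # Draw metric columns with replacement; the same columns apply to every journal
        cols = rng.choices(range(n_metrics), k=n_metrics)
        for j, r in ranks.items():
            draws[j].append(sum(r[c] for c in cols) / n_metrics)
    intervals = {}
    for j, vals in draws.items():
        vals.sort()
        intervals[j] = (percentile(vals, 0.025), percentile(vals, 0.975))
    return intervals

for journal, (lo, hi) in bootstrap_rank_intervals(ranks).items():
    print(f"{journal}: {lo:.1f} to {hi:.1f}")
```

A journal that ranks consistently across metrics (J_C here) gets a narrow interval. One whose ranks disagree across metrics (J_B) gets a wider one.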

My use of the terms ‘relative’ and ‘within the sample’ is important here — journal metrics should only ever be considered as indices of relative, average-citation performance from within a discipline-specific or personally selected sample of journals. By itself, the value of a particular journal citation metric is largely meaningless.
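The following example shows why a rank only means something within its sample. The same hypothetical journal (J_X) keeps an unchanged metric value, yet it comes first in one invented set of specialist journals and last in an invented set of high-profile generalist journals. All names and values are made up, and `rank_in_sample` is a hypothetical helper.

```python
def rank_in_sample(journal, values):
    """1-based rank of `journal` within `values` (highest value = rank 1); assumes no ties."""
    ordered = sorted(values, key=values.get, reverse=True)
    return ordered.index(journal) + 1

# Invented Impact Factor values; J_X has the same value in both samples.
specialist = {"J_X": 3.2, "J_S1": 2.1, "J_S2": 1.7, "J_S3": 1.2}
generalist = {"J_X": 3.2, "J_G1": 9.8, "J_G2": 6.5, "J_G3": 4.4}

print(rank_in_sample("J_X", specialist), "of", len(specialist))  # 1 of 4
print(rank_in_sample("J_X", generalist), "of", len(generalist))  # 4 of 4
```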

As example lists, we looked at 100 Ecology, 100 Medicine and 50 Multidisciplinary journals and applied our ranking method. We also included some samples of journals from highly specialised disciplines (Marine Biology & Fisheries, and Obstetrics & Gynaecology). All these ranks were based on 2013 metric values (because the 2014 values had not yet been released when I started the analysis).

We even provided the results of a small survey I ran last year to find out what ecologists thought of particular journals (many belated thanks to all who participated). I wanted to get more to the heart of the ‘reputation’ idea and compare this to the combined ranks³. It turns out that the correlation between what we think about journals and their composite citation-based ranks is pretty good (Spearman’s ρ = 0.67–0.83). However, the deviations from the rank expectations are probably more interesting (see Fig. 2B in the new paper).
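For readers unfamiliar with Spearman’s ρ: it is the ordinary (Pearson) correlation computed on ranks rather than on raw values, so it measures how well two orderings agree. The sketch below uses invented survey and composite ranks for five hypothetical journals (1 = best in both). These are not the survey data from the paper.

```python
from math import sqrt

def average_ranks(xs):
    """Ranks (1 = smallest value), with tied values sharing their average rank."""
    order = sorted(range(len(xs)), key=lambda i: xs[i])
    ranks = [0.0] * len(xs)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and xs[order[j + 1]] == xs[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks

def spearman_rho(x, y):
    """Spearman's rho as the Pearson correlation of the two rank vectors."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sqrt(sum((a - mx) ** 2 for a in rx))
    sy = sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)

# Invented ranks for five hypothetical journals (1 = best).
survey_rank    = [1, 2, 3, 4, 5]
composite_rank = [2, 1, 3, 5, 4]
print(round(spearman_rho(survey_rank, composite_rank), 2))  # 0.8
```

With no ties, this matches the textbook shortcut ρ = 1 − 6Σd²/[n(n² − 1)]. Here Σd² = 4 and n = 5, giving 0.8.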

I know what you’re thinking though — “Hey Corey, what are the journal ranks in conservation & ecology based on the 2014 metrics?”. You could do this yourself by getting the values online and running the R script we provided with the paper, but I know most of you couldn’t be arsed.

So I’ve taken the liberty of ranking a mixed list of ecology, conservation and multidisciplinary journals that most likely covers the gamut of venues the CB.com readership might be interested in (you can download the raw data here). I make no claims that this list is comprehensive or representative, so if you don’t like my choices or want to include different journals, you’ll just have to do it yourself.

Now, a long list like that sort of goes against the philosophy of ranking journals in the first place. Ideally, the list should be a lot smaller so that the relative ranks start to make sense at a practical level.

For example, here is the ranking of 36 Ecology-only journals from that larger list (again, this is not comprehensive):

And here is the ranking for 21 Conservation-only journals (you might quibble with what I call ‘conservation-only’ here):

While these are informative, they still don’t really represent the kind of information I think I need when deciding where to send a manuscript. You can, of course, compile any list of journals you want, but below I provide two more example sets.

The top panel of the figure below shows the relative ranking of four ‘review’ journals in ecology/conservation. The bottom panel shows six journals where one might consider sending a manuscript with a ‘biogeography’ theme (I threw PLoS One in there as a multidisciplinary back-up option).

I think you get the picture of what sort of things you can do with the new method, and of course, it’s not limited to any particular field at all. So, go crazy! Get your own lists together and start ranking!

—

¹ I originally released the pre-print when I published this post. I don’t normally release pre-prints for blog posts, but given that (i) the paper was to be published soon in an open-access journal, (ii) I had already paid the open-access fee (way too bloody high, in my opinion), and (iii) Nature recently reported that scientists should be more inclined to post pre-prints, I said “fuck it” and decided to release it early.

² I hope the irony of the publishing venue didn’t escape you. PLoS One ranked consistently low in nearly all the sample journal lists we analysed. Google Scholar’s h-index ranks it so highly because that metric is inflated by high publication output (i.e., many papers produced annually). Once you control for this, PLoS One‘s h-index drops to a level more in line with its Impact Factor.
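The definition of the h-index makes this inflation effect easy to see. The h-index is the largest h such that h papers each have at least h citations, so it can never exceed the number of papers a journal publishes. The sketch below compares two invented citation profiles. A hypothetical high-volume journal with a much lower mean citation rate still ends up with the larger h-index. The sketch does not attempt to reproduce the control for output mentioned above.

```python
def h_index(citations):
    """Largest h such that at least h papers have at least h citations each."""
    h = 0
    for i, c in enumerate(sorted(citations, reverse=True), start=1):
        if c < i:
            break
        h = i
    return h

# Invented citation counts, one entry per paper.
small_journal = [40, 35, 30, 25, 20, 18, 15, 12, 10, 8]  # 10 well-cited papers
large_journal = [12] * 200 + [3] * 800                   # 1000 modestly cited papers

for name, cites in [("small", small_journal), ("large", large_journal)]:
    mean_cites = sum(cites) / len(cites)
    print(f"{name}: {len(cites)} papers, mean {mean_cites:.1f} citations, h = {h_index(cites)}")
# small: 10 papers, mean 21.3 citations, h = 9
# large: 1000 papers, mean 4.8 citations, h = 12
```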

3I fully admit that there is some circularity here; people are subconsciously influenced by citation-based metrics already.

[…] Now that Clarivate, Google, and Scopus have recently published their respective journal citation scores for 2021, I can now present — for the 14th year running on ConservationBytes.com — the 2021 conservation/ecology/sustainability journal ranks based on my journal-ranking method. […]

[…] is the 13th year in a row that I’ve generated journal ranks based on the journal-ranking method we published several years […]

[…] reasons we developed a multi-index rank are many (summarised here), but they essentially boil down to the following […]

[…] the last 12 years and running now, I’ve been generating journal ranks based on the journal-ranking method we published several years ago. Since the Google journal h-indices were just released, here […]

[…] first acknowledge that just like journal ranks, a researcher’s citation performance should only be viewed as relative to a comparison group. […]

[…] has become my custom (11 years and running), and based on the journal-ranking method we published several years ago, here are the new 2018 ranks for (i) 90 ecology, conservation and […]

[…] few years ago we wrote a bibliometric paper describing a new way to rank journals, and I still think it is one of the […]

[…] of all 544 papers, and then looked at the relationships between rank and various aspects, such as journal Impact Factor, citation rate, article age, and type, field, or approach. The interesting relationships that […]

[…] year we wrote a bibliometric paper describing a new way to rank journals, which I contend is a fairer […]

[…] rejection is final no matter what you could argue or modify. So your only recourse is move on to a lower-ranked journal. If you consistently submit to low-ranked journals, you would obviously receive far fewer […]

[…] in February I wrote about our new bibliometric paper describing a new way to rank journals, which I still contend is a fairer […]

Interesting work and nicely done. I was slightly disappointed to see PeerJ didn’t make the list. It’s cheaper than some other open-access journals and publishes plenty of ecology and conservation papers.

I promised myself I wouldn’t do this — it’s easy to add/subtract any journal you wish — but in your case I’ll make an exception. If you add PeerJ to the list of 75 journals I listed in the first figure, it falls between AUSTRAL ECOL and J INSECT CONSERV (rank: 64.4–70.5).

Nice article Corey! It would be interesting to see how your results match up with the ranking of ecological journals I did using the h-index back in 2007 (citation with link below). I also ranked according to the m-index to account for journal differences in the number of publications. Again, a nice contribution to the literature. Thanks for sharing.

Cheers,

Julian

Olden, J.D. 2007. How do ecological journals stack-up? Ranking of scientific quality according to the h index. Écoscience 14:370-376.

Click to access Ecoscience_2007.pdf

Hi Corey,

This is a really nice concept, and it’s good to see that the Impact Factor may be losing its grip as the sole measure of scientific impact. The world has moved on – at my university, impact factors can no longer be considered in the process of evaluating academic promotions – about time! However, in this age of the “Innovation statement” and long-standing calls for scientists to get better at engaging with the real world, I am still deeply concerned that we place far too much emphasis on ‘citations’, and I’m sceptical of any evaluation approach that centres its weight on metrics of a journal’s ‘prestige’ or ‘value’ – regardless of how these are measured.

Speaking as someone who spent ten years in a conservation agency, high-impact research is work that is used to make informed decisions about conservation policy and management. The journal the work is published in is largely irrelevant. Also consider that managers often don’t have the luxury of keeping up with the literature, and most agencies don’t have journal access or libraries. I can guarantee that local landholders and industry aren’t reading the latest edition of Conservation Biology either, and don’t know or care which journals are the most prestigious.

In this reality, high-impact research is information that stakeholders (1) know about, (2) can access, and (3) can use for conservation. Combined, these factors mean that the journal’s media credentials and access policies are probably much more important than any metric of its ‘value’ or ‘impact’. When I’m evaluating the impact of someone’s work, I ask them for evidence of their engagement with managers, NGOs, industry, and stakeholders, and evidence for how their work has been used. To me, an applicant who sits on a stakeholder advisory board and has had their work cited in a regulatory impact statement or conservation plan has demonstrated much higher ‘impact’ than another applicant who lacks these achievements but has lots of publications in high-ranking journals.

I don’t have a problem with choosing to publish in high-impact journals, but I have a large problem with using a scientist’s publication record as the primary measure of their impact in conservation. So yes, use metrics to inform decisions about where we send our manuscripts – but if we think our research has real-world applications, we need to put more thought into how to get our research to the people who need to know about it. And as conservation scientists, we need to invest more energy in identifying useful metrics of research impact in real-world conservation, not just metrics of how our papers are cited by other scientists in their papers.

I appreciate that these comments are about the broader context of scientific impact, and I think what you’ve come up with is far better than blind reliance on the almighty IF, or relying on any single citation metric. But just wanted to float the idea that if we’re talking about impact, there are other types of ‘impact factors’ we need to consider as well.

Just my $0.02.

cheers

Andrew

I agree Andrew.

We should consider a metric of legislative impact for conservation. If you can figure out a way to get the data, we can do the analytics here at JMP software (SAS).

Corey, over to you?

Zoe.
