Many non-Australians might not know it, but Australia is overrun with feral vertebrates (not to mention weeds and invertebrates). We have millions of pigs, dogs, camels, goats, buffalo, deer, rabbits, cats, foxes and toads (to name a few). The continent separated from Gondwana about 80 million years ago, and that long isolation allowed a fairly unique biota to evolve. So when Aboriginal people and, later, Europeans started introducing all these non-native species, the result quickly became an ecological disaster. One of my first posts here on ConservationBytes.com was in fact about feral animals. Since then, I’ve written quite a bit on invasive species, especially with respect to mammal declines (see Few people, many threats – Australia’s biodiversity shame, Shocking continued loss of Australian mammals, Can we solve Australia’s mammal extinction crisis?).

So you can imagine that we do try to find the best ways to reduce the damage these species cause. Unfortunately, we tend to waste a lot of money because density-reduction culling programmes aren’t usually done with much forethought, organisation or associated research. A case in point: swamp buffalo were killed in vast numbers in northern Australia in the 1980s and 1990s, but now they’re back with a vengeance.

Enter S.T.A.R. – the clumsily named ‘Spatio-Temporal Animal Reduction’ [model] that we’ve just published in Methods in Ecology and Evolution (title: Spatially explicit spreadsheet modelling for optimising the efficiency of reducing invasive animal density by CR McMahon and colleagues).

This little Excel-based spreadsheet model is designed specifically to optimise the culling strategies for feral pigs, buffalo and horses in Kakadu National Park (northern Australia), but our aim was to make it easy enough to use and modify so that it could be applied to any invasive species anywhere (ok, admittedly it would work best for macro-vertebrates).

The application works on a grid of habitat types, each with its own carrying capacity for each species. We assume some fairly basic density-feedback population models and allow animals to move among cells. We then hit them virtually with a proportional culling rate (which includes a hunting-efficiency feedback) and estimate the costs associated with each level of kill. The final outputs are density maps and graphs of the population trajectory.
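For readers who think better in code than in spreadsheets, here is a rough Python sketch of one time step of that kind of grid model. It is not the S.T.A.R. code (that lives in Excel and VBA), and every specific in it is my assumption for illustration: the Ricker form of density feedback, the equal sharing of dispersers among the four neighbouring cells, the saturating efficiency term `d / (d + half_sat)`, and all function and parameter names.

```python
import math


def neighbours(r, c, n_rows, n_cols):
    """Return the 4-connected neighbours of cell (r, c) that lie inside the grid."""
    candidates = [(r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)]
    return [(i, j) for i, j in candidates if 0 <= i < n_rows and 0 <= j < n_cols]


def step(density, capacity, r_max, move_rate, cull_rate, half_sat):
    """Advance the grid by one time step; return (new_density, animals_killed).

    All model forms here are illustrative assumptions, not the published model.
    half_sat is assumed to be positive.
    """
    n_rows, n_cols = len(density), len(density[0])

    # 1. Density feedback: Ricker growth towards each cell's carrying capacity.
    #    Cells with zero capacity are assumed to hold no animals.
    grown = [[d * math.exp(r_max * (1 - d / k)) if k > 0 else 0.0
              for d, k in zip(d_row, k_row)]
             for d_row, k_row in zip(density, capacity)]

    # 2. Movement: a fixed fraction leaves each cell, shared equally among neighbours.
    moved = [[0.0] * n_cols for _ in range(n_rows)]
    for r in range(n_rows):
        for c in range(n_cols):
            nbrs = neighbours(r, c, n_rows, n_cols)
            leaving = grown[r][c] * move_rate if nbrs else 0.0
            moved[r][c] += grown[r][c] - leaving
            for i, j in nbrs:
                moved[i][j] += leaving / len(nbrs)

    # 3. Proportional cull with efficiency feedback: the realised kill
    #    proportion drops as animals become scarce and harder to find.
    killed = 0.0
    new_density = []
    for row in moved:
        new_row = []
        for d in row:
            efficiency = d / (d + half_sat) if d > 0 else 0.0
            kill = cull_rate * efficiency * d
            killed += kill
            new_row.append(d - kill)
        new_density.append(new_row)
    return new_density, killed


def cull_cost(killed, overhead, cost_per_kill):
    """Assumed linear cost: a fixed overhead plus a flat cost per animal killed."""
    return overhead + cost_per_kill * killed
```

Calling `step` repeatedly and summing the grid each time gives a population trajectory, and the grid itself at any step is the density map.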

We’ve added a lot of little features to maximise flexibility, including adjustable carrying capacities, movement rates, operating costs and overheads, and proportional harvest rates. The user can also run some basic sensitivity analyses or do district-specific culls. Finally, we’ve included three optimisation routines that estimate the best allocation of killing effort, either to maximise density reduction or to work within a specific budget, and in either a spatial or non-spatial context.
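To give a flavour of what ‘best allocation of killing effort to a specific budget’ means, here is a minimal Python sketch. It is not one of the three routines in the spreadsheet. I am assuming a simple diminishing-returns kill function (`abundance * (1 - exp(-catchability * effort))`), equal-cost units of effort, and a greedy rule that spends each unit where it buys the most extra kills. The names `expected_kills`, `allocate_effort`, `catchability` and `cost_per_unit` are all hypothetical.

```python
import math


def expected_kills(abundance, catchability, effort):
    """Assumed diminishing-returns kill function for one district."""
    return abundance * (1 - math.exp(-catchability * effort))


def allocate_effort(abundances, catchabilities, budget, cost_per_unit):
    """Greedily assign whole units of effort to districts within a budget.

    Each unit goes to the district where it adds the most expected kills.
    Returns a list with the number of effort units per district.
    """
    units = int(budget // cost_per_unit)
    effort = [0] * len(abundances)
    for _ in range(units):
        # Marginal gain of one more unit of effort in each district.
        gains = [expected_kills(n, q, e + 1) - expected_kills(n, q, e)
                 for n, q, e in zip(abundances, catchabilities, effort)]
        best = max(range(len(gains)), key=gains.__getitem__)
        effort[best] += 1
    return effort
```

For example, `allocate_effort([1000, 100], [0.1, 0.1], 500, 100)` puts all five affordable units into the first district, because even its fifth unit removes more animals than the first unit would in the much smaller second district.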

Our hope is that wildlife managers responsible for safeguarding the biodiversity of places like Kakadu National Park actually use this tool to maximise their efficiency. Kakadu has a particularly nasty set of invasive species, so it’s important those in charge get it right. So far, they haven’t been doing too well.

You can download the Excel program itself here (click here for the raw VBA code), and the User Manual is available here. Happy virtual killing!

P.S. If you’re concerned about animal welfare issues associated with all this, I invite you to read one of our recent papers on the subject: Convergence of culture, ecology and ethics: management of feral swamp buffalo in northern Australia.

McMahon, C.R., Brook, B.W., Collier, N., & Bradshaw, C.J.A. (2010). Spatially explicit spreadsheet modelling for optimising the efficiency of reducing invasive animal density Methods in Ecology and Evolution DOI: 10.1111/j.2041-210X.2009.00002.x

Albrecht, G., McMahon, C., Bowman, D., & Bradshaw, C. (2009). Convergence of Culture, Ecology, and Ethics: Management of Feral Swamp Buffalo in Northern Australia Journal of Agricultural and Environmental Ethics, 22 (4), 361-378 DOI: 10.1007/s10806-009-9158-5

Bradshaw, C., Field, I., Bowman, D., Haynes, C., & Brook, B. (2007). Current and future threats from non-indigenous animal species in northern Australia: a spotlight on World Heritage Area Kakadu National Park Wildlife Research, 34 (6) DOI: 10.1071/WR06056

I love these sorts of experiments. Ecology (and considering conservation ecology a special subset of the larger discipline) is a messy business, mainly because ecosystems are complex, non-linear, emergent, interactive, stochastic and meta-stable entities that are just plain difficult to manipulate experimentally. Therefore, making inference of complex ecological processes tends to be enhanced when the simplest components are isolated.

I love these sorts of experiments. Ecology (and considering conservation ecology a special subset of the larger discipline) is a messy business, mainly because ecosystems are complex, non-linear, emergent, interactive, stochastic and meta-stable entities that are just plain difficult to manipulate experimentally. Therefore, making inference of complex ecological processes tends to be enhanced when the simplest components are isolated. I just returned from a week-long scientific mission in China sponsored by the

I just returned from a week-long scientific mission in China sponsored by the

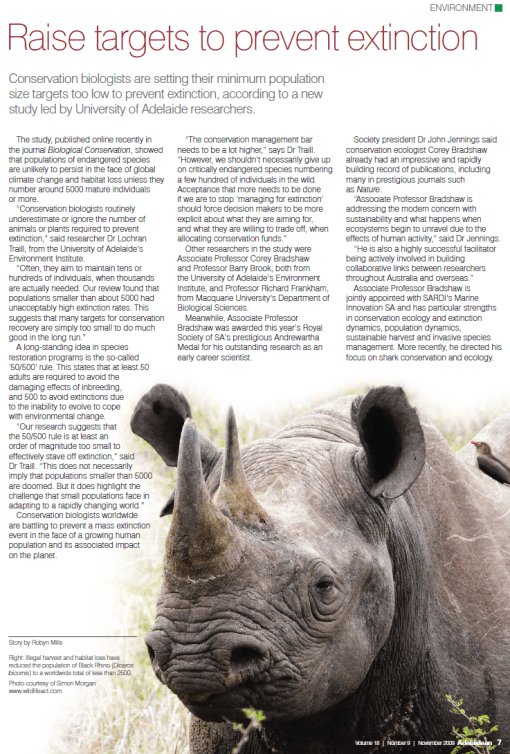

Ah, it doesn’t go away, does it? Or at least, we won’t let it.

Ah, it doesn’t go away, does it? Or at least, we won’t let it. I’m going to do a double review here of two papers currently online in

I’m going to do a double review here of two papers currently online in